In deep learning, fine-tuning is a form of transfer learning in which a model that was already trained on one task (a pre-trained model) is trained further on new data so that it works on a new task. Fine-tuning builds on what the model learned during its earlier training instead of starting from scratch.

You can fine-tune the whole model or only some of its layers. When only certain layers are fine-tuned, the rest are “frozen,” meaning their parameters are not updated during training. Another option is to add small components, called “adapters,” that have far fewer parameters than the original model. With this approach, only the adapters are trained, while the original model’s parameters stay frozen. This can make fine-tuning faster and less demanding on memory.
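To make the size difference concrete, here is a minimal sketch of one kind of adapter: a low-rank adapter in the style of LoRA, which is one specific adapter design and not the only one. The layer sizes, the rank, and all names (`W`, `A`, `B`, `forward`) are assumptions chosen for illustration, and the "pre-trained" weights are random stand-ins.

```python
import random

def make_matrix(rows, cols, scale=0.1, seed=0):
    """Build a rows x cols matrix of small random values."""
    rng = random.Random(seed)
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    """Multiply matrix m by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def count_params(m):
    return sum(len(row) for row in m)

d_in, d_out, rank = 64, 64, 4  # illustrative sizes (assumption)

W = make_matrix(d_out, d_in, seed=1)      # frozen weights (random stand-in for a pre-trained layer)
A = make_matrix(rank, d_in, seed=2)       # trainable adapter: projects down to `rank` values
B = [[0.0] * rank for _ in range(d_out)]  # trainable adapter: projects back up; zeros make it a no-op at first

def forward(x):
    """Frozen layer output plus the adapter's low-rank correction."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + d for b, d in zip(base, delta)]

frozen_params = count_params(W)                     # 64 * 64 = 4096
adapter_params = count_params(A) + count_params(B)  # 4 * 64 + 64 * 4 = 512
```

In this sketch the adapter holds 512 trainable parameters next to 4,096 frozen ones. Because `B` starts at zero, the adapted layer initially gives the same output as the frozen layer, and training would change only `A` and `B`.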

For some types of models, like convolutional neural networks (often used in image processing), it’s common to freeze the early layers, the ones closest to the input, because they detect simple, general patterns like edges or textures. Later layers capture more complex features that are more specific to the original task, so they are the ones adjusted for the new task.
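Freezing can be sketched without any deep learning library. The toy model below is not a convolutional network: it is a chain of three scalar "layers" multiplied together, used only to show that frozen layers keep their values while the last layer is updated by gradient descent. The weights, data, and function names are all hypothetical.

```python
def forward(weights, x):
    """Pass x through a chain of scalar layers: y = w3 * w2 * w1 * x."""
    y = x
    for w in weights:
        y *= w
    return y

def gradients(weights, x, target):
    """Gradient of the squared error (y - target)**2 for each layer weight."""
    y = forward(weights, x)
    dloss_dy = 2.0 * (y - target)
    grads = []
    for i in range(len(weights)):
        others = 1.0
        for j, w in enumerate(weights):
            if j != i:
                others *= w
        grads.append(dloss_dy * others * x)
    return grads

def train(weights, frozen, data, lr=0.01, epochs=200):
    """Gradient descent that skips every layer marked as frozen."""
    weights = list(weights)
    for _ in range(epochs):
        for x, target in data:
            grads = gradients(weights, x, target)
            for i, g in enumerate(grads):
                if not frozen[i]:
                    weights[i] -= lr * g
    return weights

pretrained = [1.5, 0.8, 1.0]      # stand-in "pre-trained" weights (assumption)
frozen = [True, True, False]      # freeze the two early layers, tune the last one
data = [(1.0, 3.0), (2.0, 6.0)]   # new task: y = 3 * x

tuned = train(pretrained, frozen, data)
```

After training, the first two weights are still 1.5 and 0.8, and only the last weight has moved (toward 2.5, since 1.5 × 0.8 × 2.5 = 3). Deep learning frameworks offer the same idea through a per-parameter switch that excludes frozen parameters from updates.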

Most pre-trained models have learned from large sets of general data, which makes them good starting points. Usually, they are fine-tuned by reusing the pre-trained parameters as a starting point and adding a new layer for the specific task, which is trained from scratch. Fine-tuning the whole model is also common and often gives better results, but it requires more computing power.
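The "new layer on top" approach can be illustrated with a small, framework-free sketch. It assumes a hypothetical pre-trained feature extractor with fixed weights (`PRETRAINED_WEIGHTS`) and adds a logistic-regression layer (the "head") that starts at zero and is the only part trained. The dataset is a made-up toy task: label 1 when the first coordinate is larger than the second.

```python
import math

# Hypothetical "pre-trained" feature extractor: fixed weights, never updated.
PRETRAINED_WEIGHTS = ((1.0, -1.0), (-1.0, 1.0))

def extract_features(x):
    """Frozen layer: a linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(head_w, head_b, x):
    """Probability of label 1 from the frozen features and the new head."""
    feats = extract_features(x)
    return sigmoid(sum(w * f for w, f in zip(head_w, feats)) + head_b)

def train_head(data, lr=0.1, epochs=100):
    """Train only the new task-specific layer, starting from zeros."""
    head_w = [0.0, 0.0]
    head_b = 0.0
    for _ in range(epochs):
        for x, label in data:
            feats = extract_features(x)
            error = predict_proba(head_w, head_b, x) - label  # log-loss gradient w.r.t. the score
            head_w = [w - lr * error * f for w, f in zip(head_w, feats)]
            head_b -= lr * error
    return head_w, head_b

# Toy labelled data for the new task (assumption).
data = [((2.0, 0.0), 1), ((3.0, 1.0), 1), ((1.5, 0.5), 1),
        ((0.0, 2.0), 0), ((1.0, 3.0), 0), ((0.5, 1.5), 0)]

head_w, head_b = train_head(data)
predictions = [int(predict_proba(head_w, head_b, x) > 0.5) for x, _ in data]
```

Only `head_w` and `head_b` change during training. Fine-tuning the whole model would also update `PRETRAINED_WEIGHTS`, which means computing and applying gradients for every layer, and that is where the extra computing cost comes from.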

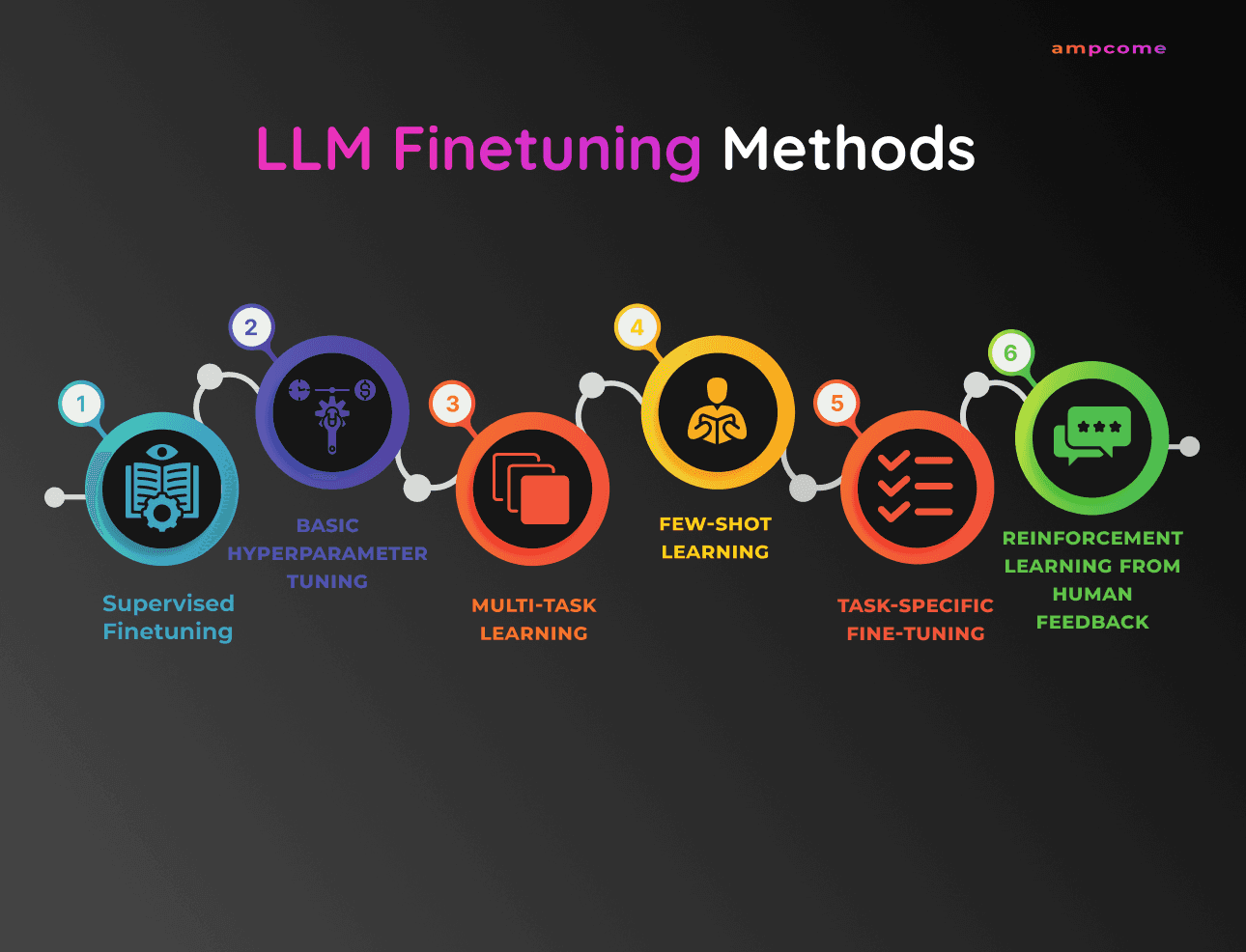

Fine-tuning is typically done with supervised learning, where each training example comes with a correct answer (a label) to guide the model. It can also be done with weak supervision, where the labels are noisy, imprecise, or limited. For language models, fine-tuning can also be combined with reinforcement learning from human feedback (RLHF), in which people rate or rank the model’s outputs. For example, ChatGPT is a fine-tuned version of a GPT model (from the GPT-3.5 series), trained with RLHF to improve its responses.